Despite the many benefits and practical applications of artificial intelligence, it also poses several threats that we all need to be aware of. Some of these threats could become serious problems in the future if they are not addressed ahead of time. According to Elon Musk, AI could even lead to the next world war if it is not properly managed.

In fact, Elon considers AI one of the biggest existential threats to humankind. That is why it is important to identify the potential threats of AI now, so that we can figure out how to deal with them before they cause severe problems, today or in the future. In today’s article, we will discuss the threats of AI that could affect us now or in the next couple of years. Without further ado, let’s jump right in!

The Threats and Negative Impacts of AI

Job loss

When companies start using AI-powered tools to do work that was previously done by humans, many people will lose their jobs. Studies show that, as of 2021, 86% of CEOs reported that AI was a mainstream technology in their office. Executives and business owners are embracing AI tools because, for many tasks, they can be more productive, less costly, and more accurate than humans.

Popular tasks that artificial intelligence tools can already perform include proofreading documents, analyzing market research, accounting and bookkeeping, driving cars, and more. In the next couple of years, millions of people doing these jobs could be left jobless if they don’t acquire more sophisticated skills that AI can’t replicate.

Of course, AI will not replace every person doing the jobs mentioned above. However, companies will need far fewer employees in these positions, since AI-powered tools will handle most of the tasks. In customer support, for example, AI tools will take on the repetitive tasks, leaving a few people to handle cases that require human judgment and critical thinking.

Spread of misinformation on social media

Social media content-ranking algorithms use machine learning and AI to favor the content that users engage with most, so that it is shown to even more users on the platform. However, these algorithms are not yet good enough to filter out fake information that could harm the people using these platforms.

So, wrongdoers can use social media to push their agendas, since their posts are shown to more people if they attract engagement in the first few minutes after publication. The algorithms that social media platforms use effectively assume that any content that gets a lot of engagement is good for users.
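To make the ranking behavior described here more concrete, the following is a toy sketch of engagement-based ranking in Python. The `Post` fields, the weights, and the "engagement per minute" formula are all hypothetical and do not describe how any real platform ranks content. The point is only that nothing in the score measures whether a post is true, so a post that draws a quick burst of reactions can rise to the top.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    likes: int
    comments: int
    shares: int
    minutes_since_posted: float
    is_accurate: bool  # known for this demo only; the ranker never reads it


def engagement_score(post: Post) -> float:
    """Hypothetical score: weighted engagement divided by the post's age."""
    # Assumed weights: a share spreads a post further than a like does.
    raw = post.likes + 2 * post.comments + 3 * post.shares
    # Dividing by age rewards posts that collect engagement quickly,
    # so a burst in the first few minutes pushes a post up the feed.
    return raw / (post.minutes_since_posted + 1)


def rank_feed(posts: list[Post]) -> list[Post]:
    # Highest engagement first; accuracy plays no part in the ordering.
    return sorted(posts, key=engagement_score, reverse=True)


posts = [
    Post("Council publishes budget report", 120, 10, 5, 120, True),
    Post("Fabricated health scare", 90, 60, 40, 10, False),
    Post("Sunset photo", 300, 5, 2, 60, True),
]

feed = rank_feed(posts)
for post in feed:
    print(f"{engagement_score(post):6.2f}  {post.title}")
```

With these made-up numbers, the fabricated post ranks first because it gathered many comments and shares within ten minutes, even though it has the fewest likes of the three.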

Yes, these algorithms can help us find content that matters to us, but a lot of work is still needed to keep them from becoming megaphones for fake or harmful information. In the last couple of years, most of the popular social platforms, including Twitter and Facebook, have built more robust tools to deal with the spread of fake information on their platforms.

These fake information detection tools are backed by human content moderators who handle fake content that machines fail to identify. However, the entire content moderation system, both AI and human, is still limited by language: most of the native languages of Asia and Africa are not yet supported by major social media sites.

So, if someone uses a local language to incite violence on one of these platforms, the message could easily be amplified by the platform’s AI-powered ranking algorithm, potentially with fatal consequences. All in all, there is still a lot to be done to fight misinformation and disinformation on social media.

The rise of deepfakes

A deepfake is an AI-generated video that shows a real person saying or doing something they never actually said or did. If you are not careful, you may not be able to tell a deepfake from a real video, and a well-made deepfake can mislead viewers into believing the information it conveys.

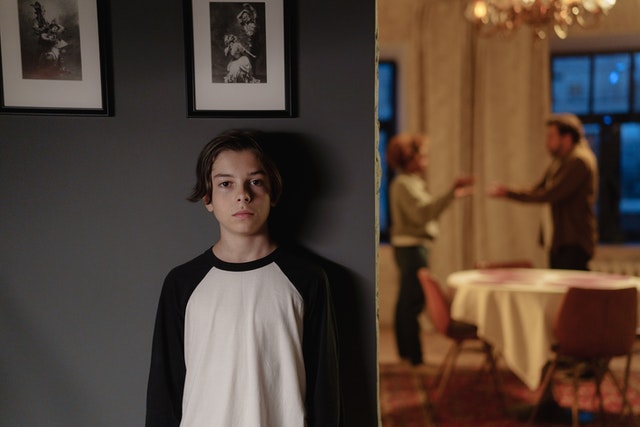

Deepfakes could cause a lot of damage if the wrong people get access to the tools used to create them. Someone could create a deepfake video to blackmail you at your workplace or to sow mistrust in your family. Basically, with the right tools it is possible to create almost any kind of deepfake.

Deepfake videos are usually shared on social media and instant messaging platforms, which makes it easy for them to go viral within a few hours. As AI continues to improve, the tools used to create these videos will only become more convincing, further worsening the spread of misinformation and fake news on the internet.

This video shows one of the most popular deepfakes to have circulated on the internet. Many people could easily fall for this kind of video if they are not warned beforehand that what they are about to watch is a deepfake.

AI weapons

One of the most feared developments in the world right now is weapons that use AI to make independent decisions. Such weapons can act without any human intervention. In an open letter written by AI and robotics researchers, the authors posed the question of whether to start a global AI arms race or to prevent it from starting.

They went on to warn that if any major military power pushes ahead with AI weapon development, a global arms race is virtually inevitable, and “the endpoint of this technological trajectory is obvious: autonomous weapons will become the Kalashnikovs of tomorrow.” We can’t rule out that this is already happening, given how heavily governments are investing in technology to show their power.

Putting AI in control of dangerous weapons is an existential threat to humanity, one that can only be avoided by not giving AI such power.

Algorithmic trading could lead to a financial crisis

A bug or a simple mistake in a trading algorithm can have a huge impact on the market. For those hearing the term for the first time, algorithmic trading is trading in which a computer executes orders based on pre-programmed instructions. If any of those instructions are wrong or compromised, the market could be significantly disrupted. Our hope is that the programmers building these trading tools never make such a mistake.
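As a hypothetical illustration of how a single missing instruction can matter, here is a toy Python sketch, not a real trading system. It replays made-up prices through a simple moving-average crossover strategy; the window sizes, the lot size, and the `max_position` risk limit are all assumptions chosen for the example. Leaving out the one-line limit check stands in for the kind of bug described above.

```python
from typing import Optional


def moving_average(prices: list[float], window: int) -> float:
    """Average of the most recent `window` prices."""
    return sum(prices[-window:]) / window


def crossover_signal(prices: list[float], short: int = 3, long: int = 5) -> Optional[str]:
    """Buy when the short-term average is above the long-term one, sell when below."""
    if len(prices) < long:
        return None  # not enough price history yet
    short_ma = moving_average(prices, short)
    long_ma = moving_average(prices, long)
    if short_ma > long_ma:
        return "buy"
    if short_ma < long_ma:
        return "sell"
    return None


def run_strategy(prices: list[float], lot_size: int = 100,
                 max_position: Optional[int] = None) -> int:
    """Replay prices tick by tick and return the final position in shares.

    max_position is the (hypothetical) risk limit; passing None simulates
    shipping the algorithm with that check missing.
    """
    position = 0
    for tick in range(1, len(prices) + 1):
        signal = crossover_signal(prices[:tick])
        if signal is None:
            continue
        order = lot_size if signal == "buy" else -lot_size
        # Risk check: skip any order that would breach the position limit.
        if max_position is not None and abs(position + order) > max_position:
            continue
        position += order
    return position


rising_prices = [100.0 + i for i in range(20)]  # a steady upward trend
print("with limit:   ", run_strategy(rising_prices, max_position=500))
print("without limit:", run_strategy(rising_prices))
```

On steadily rising prices, the strategy sees a buy signal on every tick once it has five prices of history. With the limit it stops at 500 shares; without it the position grows to 1,600 shares and would keep growing for as long as the trend lasts. Production systems typically rely on several such safeguards; the toy only isolates the idea.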

Artificial intelligence could negatively affect human connections

As machines continue to get better at things that were once done by humans, our dependence on other people will gradually decrease. This threatens the human connections that hold families and organizations together. For example, factories that once brought together thousands of employees now hire just a few people to oversee the automated machines.

By nature, humans are social animals, and our happiness and satisfaction in life depend largely on our connections with other people. So, a future filled with AI-powered tools and automation could weaken the bonds between coworkers and even within families.

Studies by the World Economic Forum and Statista show that internet users worldwide spend an average of 2 hours and 27 minutes per day on social media. That adds up to more than 17 hours a week (2 hours 27 minutes × 7 days ≈ 17 hours 9 minutes). People spend this much time on social media partly because of smart ranking algorithms that show you something appealing every time you are about to close the app.

At the end of the day, time that could be spent building meaningful connections with family and friends is instead spent on machines programmed to capture our attention at the first sign of boredom.

Accidents and safety concerns

This mainly applies to autonomous vehicles, one of the most popular applications of artificial intelligence. If the self-driving systems built by the likes of Tesla, BMW, and Ford ever suffer a major failure, the people in the car and other road users could be at risk of losing their lives. And even if self-driving vehicles turn out to be safer than human drivers, there are still many crashes these cars cannot avoid.

A 2020 study by the Insurance Institute for Highway Safety (IIHS) indicated that autonomous vehicles would still struggle to avoid about two-thirds of the crashes currently caused by human drivers. And in the worst-case scenario, a self-driving car could cause far more accidents if something serious goes wrong with its self-driving system.

That is why, at this point, all automakers still recommend that drivers remain attentive even when the self-driving system is engaged.

How to Mitigate AI Threats

Despite the threats posed by AI, its many benefits make it an invention worth using. In this section, I will take you through some of the ways the threats and negative impacts described above can be addressed to help keep the future safe for all of us. We will look at possible solutions to several of the threats we covered.

Diversification of skills to avoid job loss

If your current job is threatened by AI, it is best to learn new skills that machines can’t replicate. In the job market, remember that you are competing not only against your fellow humans but also against machines. For now, and in the foreseeable future, AI tools may not be able to create, conceptualize, or plan strategically, so building skills in creating, conceptualizing, and strategic planning is the way to go.

Social media sites need to prioritize user safety

Building tools that can effectively deal with fake information on the internet is still a complex task. It becomes even harder because the ranking algorithms of these sites are built to amplify engaging content, since that is what keeps users coming back to the platform.

This has encouraged many wrongdoers to exploit these algorithms to amplify their own messages. I believe things would be very different if social media sites put their users’ safety first.

Video editing tools for deepfakes should be regulated

Today, anyone can access deepfake editing tools and use them however they wish. What if a regulation required these tools to add a “deepfake” badge to every video they generate? This would significantly reduce the impact of the fake information portrayed in these deepfakes.

Regulating the creation of lethal autonomous weapons (LAWs)

Countries should come together to create strict regulations on who may and may not make these kinds of weapons. Just as we have clear laws on nuclear weapons, there need to be clear international regulations governing the creation of AI-powered military equipment.

Final Thoughts

We have covered most of the major threats that AI could create now and in the future, and we are already experiencing some of the negative impacts described above. However, it is possible to make AI safer by carefully building AI tools that put humans first. Governments and other authorities should also put clear laws in place to regulate potentially dangerous AI tools such as deepfake video editors.

That said, AI is an exciting technological advancement with the potential to further simplify our lives if used properly.